Natoma Blog

How to Accelerate Enterprise AI Adoption: The 5-Pillar Framework

Oct 27, 2025

Paresh Bhaya

Technical tips

TL;DR

Accelerating enterprise AI adoption requires the right foundation, not more pilots. Organizations deploying protocol-based infrastructure like Model Context Protocol (MCP) move from experimentation to production in weeks instead of quarters. This guide provides CIOs and innovation leaders with a proven 5-pillar framework for scaling AI adoption: standardized integration layer, automated governance, rapid deployment capability, organizational readiness, and measurement systems. The result: deploy AI tools in minutes instead of months while maintaining enterprise-grade security and control.

Key Takeaways

Enterprise AI adoption has reached an inflection point - 80% of organizations report using AI, with $252B invested in 2024, but most struggle to move from pilot to production due to integration complexity and governance bottlenecks

The foundation problem determines success - Less than one-third of organizations follow AI adoption best practices; without protocol-based infrastructure, custom integration work creates 3-6 month deployment cycles that make scaling impossible

Protocol-based architecture transforms economics - Model Context Protocol (MCP) eliminates the N×M integration problem, reducing deployment time from months to minutes and cutting integration costs by 90%+

Five pillars create AI-ready foundations - Standardized integration layer (MCP), governed access and control (automated policies), rapid deployment capability (minutes not months), organizational readiness (change management), and measurement framework (track adoption to ROI)

Governance accelerates when automated - Policy-as-code enforcement, automated compliance checks, and embedded security controls enable deployment speed while maintaining enterprise protections: governance as enabler, not blocker

Speed creates compounding competitive advantage - Organizations deploying AI 100x faster than competitors build institutional knowledge, attract better talent, iterate on use cases, and develop sustained advantages that late adopters can't easily match

Systematic execution beats enthusiasm - The 30-60-90 day roadmap (foundation setup, pilot expansion, enterprise production) provides a proven path from experimentation to scaled deployment with measured ROI

Why Enterprise AI Adoption Can’t Wait

Enterprise AI adoption has reached an inflection point. According to Gartner, 40% of enterprise applications will feature AI agents by 2026, a dramatic acceleration from current adoption rates. This isn't a gradual shift; it's a competitive transformation happening in real time.

The data confirms the urgency. Nearly 80% of organizations now report using AI, with corporate investment hitting $252.3 billion in 2024, a 44.5% year-over-year increase. After years of sluggish uptake, business adoption has accelerated dramatically, and generative AI startup funding has nearly tripled.

Yet spending is not the same as shipping: while AI investment and experimentation have exploded, most organizations struggle to move from pilot to production. The gap between AI enthusiasm and AI implementation has never been wider.

This creates three distinct pressures on enterprise leaders:

Competitive Pressure: Your competitors are deploying AI tools that improve productivity, reduce costs, and enhance customer experiences. Every quarter you delay represents lost ground in operational efficiency and market positioning.

Talent Expectations: Top engineers and knowledge workers now expect AI-augmented workflows. Organizations without modern AI infrastructure face talent retention challenges as the best employees seek employers who provide cutting-edge tools.

Board and CEO Demands: AI has moved from IT curiosity to board-level strategic priority. CEOs face pressure to demonstrate AI adoption progress, creating urgency for CIOs and innovation leaders to show tangible results.

According to McKinsey (September 2025), AI is bringing the largest organizational paradigm shift since the industrial and digital revolutions. The length of tasks AI can complete has doubled every 7 months since 2019 and every 4 months since 2024, reaching approximately 2 hours of autonomous work. By 2027, AI systems could potentially complete four days of work without supervision.

But speed without foundation creates new problems. Rushed AI deployments lead to security gaps, governance failures, integration complexity, and ultimately, pilot purgatory.

Why Do Most AI Adoption Efforts Stall?

The uncomfortable truth about enterprise AI adoption: less than one-third of organizations follow proven adoption and scaling practices for generative AI. This isn't a technology problem; it's a foundation problem.

Most AI adoption efforts follow a predictable pattern:

Phase 1: Enthusiasm - A business unit requests access to ChatGPT, Claude, or another AI tool. The use case is compelling, the ROI is clear, and everyone is excited.

Phase 2: Custom Integration - IT begins building a custom integration. The AI vendor has a unique API. Your enterprise systems (Salesforce, GitHub, Slack, internal databases) each have different authentication methods. What seemed simple becomes 3-6 months of development work.

Phase 3: Security Review - InfoSec raises valid concerns about data access, compliance, and audit trails. The review process adds another 6-12 weeks or gets stuck indefinitely in approval limbo.

Phase 4: Pilot Success - Finally, after 4-6 months, a small pilot launches. Users love it. Productivity improves. The business case is validated.

Phase 5: Scaling Stalls - But now you have 15 more AI tools requested across different departments. Each requires the same 4-6 month custom integration and security review cycle. The math becomes impossible: 15 tools × 5 months = 75 months of sequential work. The organization gets stuck with dozens of successful pilots that never scale.

This is pilot purgatory, and it's where most enterprise AI initiatives die.

The Root Causes of Stalled Adoption

Integration Complexity: Every AI vendor has a different API. Every enterprise system has different authentication requirements. The result is multiplicative custom work: N AI tools × M data sources = N×M integrations to build and maintain.

Security and Governance Delays: Manual security reviews for each AI tool create 6-12 month bottlenecks. Without automated governance, every new integration requires legal review, risk assessment, and compliance validation, all done by hand.

Technical Debt Accumulation: Point solutions proliferate across the organization. Each custom integration becomes legacy code to maintain. Update one system, and you break three AI integrations. The technical debt compounds until the infrastructure becomes fragile and unmaintainable.

Lack of Standardization: Without common protocols, vendor lock-in becomes severe. Switching AI providers means rebuilding all integrations from scratch. This creates strategic rigidity exactly when the market demands flexibility and rapid iteration.

Organizational Resistance: Employees see AI pilots come and go. After the third failed scaling attempt, change fatigue sets in. "Just another initiative that won't ship" becomes the prevailing attitude.

The missing ingredient? A protocol-based foundation that eliminates integration work, automates governance, and enables deployment at scale.

What Is the Right Foundation for Enterprise AI Adoption?

The enterprises deploying AI successfully have discovered a different approach: protocol-based integration instead of API-based point solutions.

Think about how the web works. You don't need custom integration code to visit a new website. Every browser speaks HTTP, every website serves HTTP: it's a universal standard. This standardization enabled the internet to scale from thousands to billions of sites.

Now imagine if every website required custom integration code. The web would have died in 1995.

That's exactly where enterprise AI integration stands today, except a new standard is emerging to solve it.

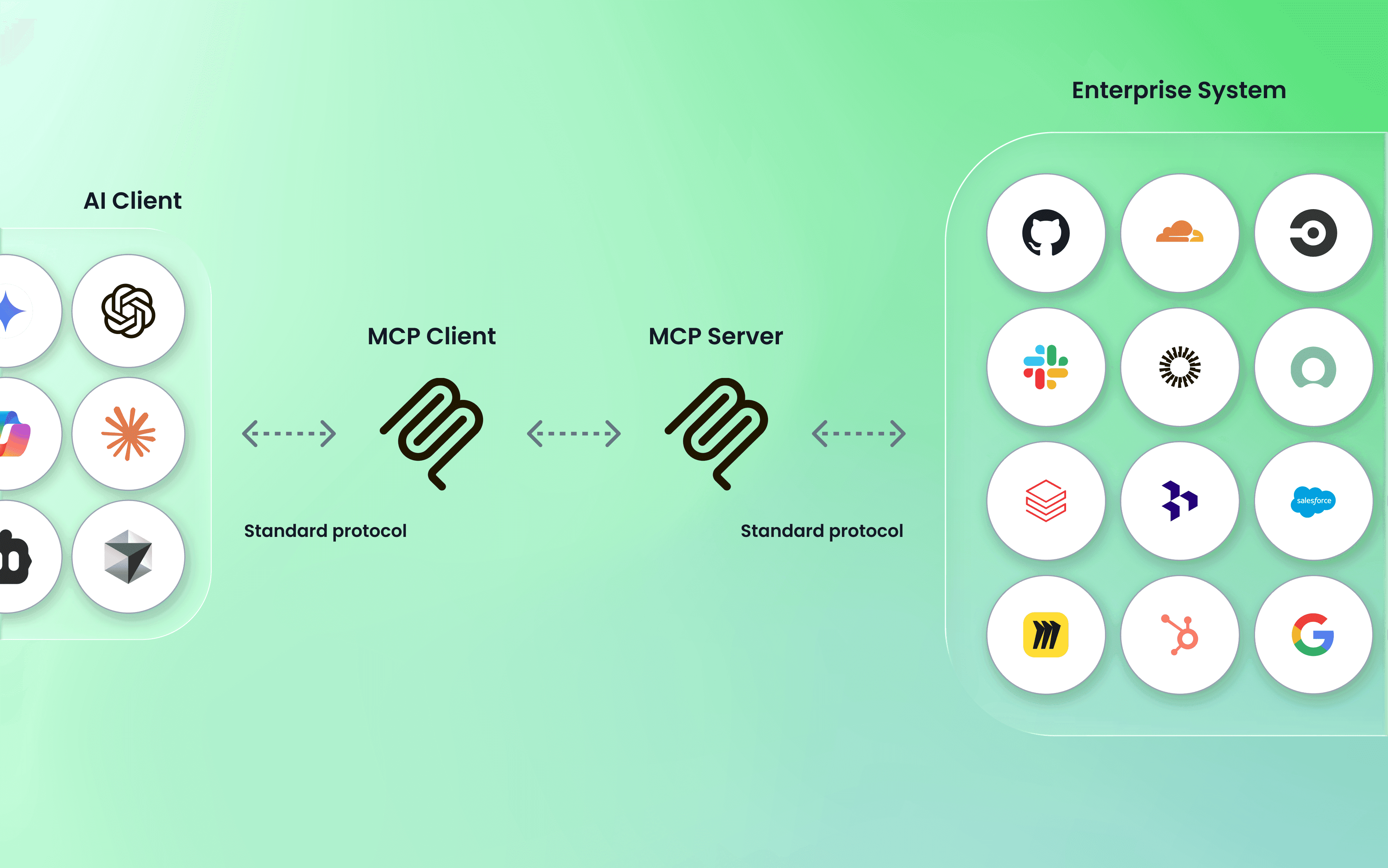

Introducing Model Context Protocol (MCP)

Model Context Protocol is an open standard for AI-to-system communication, developed by Anthropic and adopted by major AI vendors including OpenAI, Google, and Microsoft. MCP defines how AI tools access enterprise data and systems through standardized interfaces.

Here's how it works:

MCP Client: Built into AI tools like ChatGPT, Claude, and other agents

MCP Server: A connector to your enterprise system (Salesforce, GitHub, Slack, etc.)

MCP Protocol: The standard communication format between them

The breakthrough: Any AI tool with an MCP client can use ANY MCP server. One Salesforce MCP server works with every AI tool. One GitHub MCP server supports ChatGPT, Claude, and every future AI agent that adopts the standard.

No custom integration needed. No vendor lock-in. No N×M complexity.
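The client/server split described above can be sketched in a few lines. MCP messages are JSON-RPC 2.0, and the `tools/list` and `tools/call` method names below come from the MCP specification. Everything else is an assumption made for illustration: `ToyMcpServer`, `call_tool`, and the two example tools are hypothetical, and this toy skips the initialization handshake, transports, input schemas, and authentication that a real server needs. It is not the official SDK.

```python
import json
from typing import Any, Callable


class ToyMcpServer:
    """Toy stand-in for an MCP server: exposes named tools over JSON-RPC 2.0."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: dict[str, tuple[str, Callable[..., str]]] = {}

    def tool(self, name: str, description: str, fn: Callable[..., str]) -> None:
        """Register a tool under a name that clients can discover and call."""
        self._tools[name] = (description, fn)

    def handle(self, raw_request: str) -> str:
        """Dispatch one JSON-RPC request and return the JSON-RPC response."""
        req = json.loads(raw_request)
        method, params = req["method"], req.get("params", {})
        if method == "tools/list":
            result = {"tools": [{"name": n, "description": d}
                                for n, (d, _) in self._tools.items()]}
        elif method == "tools/call":
            _, fn = self._tools[params["name"]]
            text = fn(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # -32601 is the standard JSON-RPC "Method not found" error code.
            return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                               "error": {"code": -32601, "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


def call_tool(server: ToyMcpServer, tool: str, **arguments: Any) -> str:
    """Generic client: the same code works against any server speaking these messages."""
    request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                          "params": {"name": tool, "arguments": arguments}})
    response = json.loads(server.handle(request))
    return response["result"]["content"][0]["text"]


# Two hypothetical servers for two different systems, one shared client function.
crm = ToyMcpServer("crm")
crm.tool("lookup_account", "Return the owner of an account",
         lambda account: f"{account} is owned by the sales team")
repo = ToyMcpServer("repo")
repo.tool("count_open_issues", "Count open issues in a project",
          lambda project: f"{project} has 3 open issues")

print(call_tool(crm, "lookup_account", account="Acme"))
print(call_tool(repo, "count_open_issues", project="website"))
```

The point of the sketch is that `call_tool` contains nothing specific to either system: adding a third server requires no client changes.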

Protocol-Based vs. Point Solutions: The Economics

Traditional API-Based Approach:

Connect AI Tool A to Salesforce: 500 hours of custom development

Connect AI Tool B to Salesforce: Another 500 hours (different API)

Connect AI Tool A to GitHub: Another 500 hours

Connect 10 AI tools to 10 systems: 100 custom integrations

Protocol-Based MCP Approach:

Deploy 10 MCP servers (one per system): 20 minutes each

Connect any AI tool with MCP client: Zero additional work

Total integrations maintained: 10 servers (not 100 custom APIs)

| Dimension | Point Solutions | Protocol-Based (MCP) |
|---|---|---|
| Deployment Time | Months per tool | Minutes per tool |
| Integration Work | Custom per vendor | Standardized |
| Vendor Lock-In | High | Low (open standard) |
| Maintenance | Ongoing per tool | Centralized |
| Scalability | Linear (N tools) | Platform (1→N tools) |

This isn't incremental improvement; it's a transformation in deployment economics.
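The comparison above can be made concrete with a little arithmetic. The sketch below uses only the figures quoted in this article (500 hours per custom integration, 20 minutes per MCP server) and assumes every AI tool already ships with an MCP client; the function names are illustrative.

```python
HOURS_PER_CUSTOM_INTEGRATION = 500  # figure quoted in this article
MINUTES_PER_MCP_SERVER = 20         # figure quoted in this article


def point_to_point_integrations(ai_tools: int, systems: int) -> int:
    """Every AI tool needs its own connector to every system: N x M."""
    return ai_tools * systems


def protocol_connectors(systems: int) -> int:
    """One shared server per system, reused by every AI tool: M."""
    return systems


systems = 10
for ai_tools in (1, 5, 10, 15):
    custom = point_to_point_integrations(ai_tools, systems)
    shared = protocol_connectors(systems)
    print(f"{ai_tools:>2} tools x {systems} systems: "
          f"{custom:>3} custom integrations "
          f"({custom * HOURS_PER_CUSTOM_INTEGRATION:,} h) "
          f"vs {shared} MCP servers ({shared * MINUTES_PER_MCP_SERVER} min)")
```

The custom-integration count grows with every tool added, while the server count depends only on the number of systems.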

Five Pillars of an AI-Ready Foundation

Building an AI-ready foundation requires more than just technology. Based on research from McKinsey, MIT, and leading enterprises, successful AI adoption rests on five interconnected pillars:

Pillar 1: Standardized Integration Layer

The foundation starts with protocol-based connectivity. Organizations implementing MCP eliminate custom integration work by providing standardized interfaces between AI tools and enterprise systems.

What this looks like in practice:

100+ pre-built, verified MCP servers for major enterprise systems

One-click deployment for new integrations

Support for Salesforce, GitHub, Slack, Jira, ServiceNow, databases, and cloud platforms

Zero-maintenance infrastructure that updates centrally

The economic impact: Reduce 3-6 month integration timelines to 15-30 minutes. Eliminate 90% of custom development costs.

Pillar 2: Governed Access and Control

Speed doesn't mean sacrificing security. The right foundation provides automated governance that enables rapid deployment while maintaining enterprise controls.

Essential governance capabilities:

OAuth 2.1 integration with enterprise SSO (single sign-on)

Role-based access control (RBAC) with right-sized permissions

Comprehensive audit trails tracking who accessed what data and when

Anomaly detection and alerting for unusual usage patterns

Automated compliance checks for SOC2, ISO 27001, GDPR, and industry regulations

The key difference: Policy enforcement happens automatically through code, not through 6-month manual review processes. Security teams define policies once, and the platform enforces them consistently across all AI tools.
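As a rough illustration of "define policies once, enforce them everywhere," a role-based policy can be expressed as plain data plus one check function. This is a minimal sketch, not any platform's actual policy engine: the `POLICY` table, the role and system names, and `is_allowed` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical role-to-system policy; in practice this would live in version control.
POLICY: dict[str, set[str]] = {
    "sales": {"salesforce", "slack"},
    "engineering": {"github", "slack"},
}


@dataclass(frozen=True)
class AccessRequest:
    user: str
    role: str
    system: str


def is_allowed(request: AccessRequest, policy: dict[str, set[str]] = POLICY) -> bool:
    """Deny by default: unknown roles and unlisted systems are rejected."""
    return request.system in policy.get(request.role, set())


print(is_allowed(AccessRequest("dana", "sales", "salesforce")))   # sales may read CRM data
print(is_allowed(AccessRequest("dana", "sales", "github")))       # but not source code
print(is_allowed(AccessRequest("eli", "contractor", "slack")))    # unknown role is denied
```

Because the check is the same function for every AI tool, adding a tool does not add a new review, only a new caller of the existing policy.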

Pillar 3: Rapid Deployment Capability

Protocol-based foundations transform deployment velocity. What used to take quarters now happens in minutes.

Deployment transformation:

Server Selection: Browse verified MCP servers, select pre-built integration (5 minutes)

Configuration: One-click deployment with automatic OAuth setup (5 minutes)

Validation: Automated security checks and connectivity testing (5 minutes)

Production Rollout: User assignment and monitoring activation (5 minutes)

Total time from request to production: 20 minutes versus 4-6 months.

This isn't just about speed; it's about enabling experimentation. When deployment takes minutes instead of months, you can test 10 AI tools in the time competitors deploy one. Faster iteration leads to better tool selection, higher productivity, and sustained competitive advantage.

Pillar 4: Organizational Readiness

Technology alone doesn't ensure adoption success. According to McKinsey (September 2025), 89% of organizations still operate with industrial-age models, while only 1% act as decentralized networks. Organizational transformation is essential.

Critical organizational elements:

Executive Alignment: AI as a board-level strategic priority with clear sponsorship

Change Management: Structured approach to communicate vision, address fears, and build momentum

Training and Enablement: Multi-tier programs from basic AI literacy to hands-on tool training

Quick Wins Strategy: Early successes that demonstrate value and build confidence

Organizations that treat AI adoption purely as a technology project consistently underperform those that address culture, skills, and change management systematically.

Pillar 5: Measurement and Iteration

You can't improve what you don't measure. Successful AI foundations include comprehensive analytics to track adoption, productivity, and business outcomes.

Essential metrics:

Adoption Metrics: Number of AI tools deployed, active users per tool, use case coverage

Productivity Metrics: Time saved per workflow, tasks automated, output quality improvements

Speed Metrics: Deployment velocity, time from request to production

Business Metrics: ROI calculation (cost savings + value created), competitive positioning

The measurement framework should track both leading indicators (adoption rates, deployment speed) and lagging indicators (productivity gains, business impact).

From Months to Minutes: The Deployment Speed Advantage

Deployment speed isn't just about convenience; it's a strategic advantage that compounds over time.

Research from DevOps Research and Assessment (DORA) shows that elite performers deploy 100 times more frequently than low performers. This deployment frequency correlates directly with business outcomes: revenue growth, profitability, market share, and customer satisfaction.

The same principle applies to AI adoption.

Traditional AI Deployment Timeline

Weeks 1-4: Requirements and Planning

Business requirements gathering

Technical architecture design

Security requirements definition

Resource allocation

Weeks 5-12: Custom Development

API integration development (unique per vendor)

Authentication and authorization implementation

Data connector development

Error handling and logging setup

Weeks 13-20: Security Review and Testing

Security assessment and penetration testing

Compliance validation

User acceptance testing

Documentation

Weeks 21-24: Production Deployment

Production environment setup

Deployment procedures

Rollback planning

Go-live

Total Timeline: 3-6 months per AI tool

Protocol-Based Deployment Timeline

Minutes 1-5: Server Selection

Browse 100+ verified MCP servers in repository

Select pre-built integration for target system

Review server capabilities and permissions

Minutes 6-10: Configuration

One-click deployment initiation

Automatic OAuth 2.1 authentication configuration with enterprise SSO

Access policy assignment using pre-defined RBAC templates

Minutes 11-15: Validation

Automated security validation checks

Connectivity testing to enterprise systems

Access verification and permission confirmation

Minutes 16-20: Production Rollout

User assignment to authorized teams

Monitoring and alerting activation

Ready for production use

Total Timeline: 20 minutes per AI tool

The Compounding Advantage

This speed differential creates a compounding competitive advantage:

Month 1:

Protocol-based organization: 10 AI tools deployed, early productivity gains measured

Traditional organization: 1 AI tool at 30% completion, still in development

Month 3:

Protocol-based: 30 tools deployed, enterprise-wide adoption building

Traditional: 1-2 tools deployed, still in pilot phase

Month 6:

Protocol-based: 50+ tools, full enterprise AI infrastructure, significant productivity improvements

Traditional: 3-5 tools, perpetual pilot programs, scaling challenges

Month 12:

Protocol-based: Continuous experimentation, rapid iteration, sustained competitive advantage

Traditional: Pilot purgatory, change fatigue, organizational resistance

The organizations deploying AI 100 times faster don't just get productivity benefits; they develop institutional knowledge, attract better talent, iterate faster on use cases, and build compounding advantages that late adopters can't easily match.

How Does AI Governance Accelerate Deployment?

One of the most persistent myths about AI governance is that it must slow down deployment. This false choice (speed or security) keeps organizations stuck in 6-month review cycles.

The reality: Proper governance accelerates deployment by providing confidence to move fast.

Reframing Governance

Traditional governance operates through manual review processes. Every new AI tool triggers a review: legal examines data access, security assesses risks, compliance checks regulations, and risk management evaluates potential issues. This sequential review process adds 6-12 months to deployment timelines.

Protocol-based governance inverts this model. Instead of reviewing each tool manually, you encode policies once and enforce them automatically for all AI tools.

The shift:

From: Manual approval of each AI tool integration

To: Automated policy enforcement through code

From: Periodic compliance audits

To: Continuous compliance monitoring

From: Reactive risk assessment

To: Embedded guardrails that prevent issues

Right-Sized Controls

Effective governance doesn't mean giving AI tools unrestricted access. It means implementing the right controls efficiently:

OAuth 2.1 Integration: Connect to enterprise single sign-on (SSO) systems. Authentication happens through existing identity infrastructure. Users access AI tools with the same credentials they use for all enterprise systems.

Role-Based Access Control (RBAC): Define access policies based on roles, not individual users. Sales reps get access to Salesforce data but not financial systems. Engineering teams access GitHub but not customer databases. Policies scale automatically as teams grow.

Audit Trails and Observability: Every AI interaction is logged: who accessed what data, when, and for what purpose. Full audit trails satisfy compliance requirements and enable security investigations without manual effort.

Anomaly Detection: Automated monitoring identifies unusual patterns: users accessing data outside normal hours, excessive queries, or attempts to access restricted systems. Security teams receive alerts for genuine risks instead of drowning in false positives.

Compliance Automation: Platforms encode requirements for SOC2, ISO 27001, GDPR, HIPAA, and industry-specific regulations. Compliance checks happen automatically during deployment, not through month-long reviews.
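The audit-trail and anomaly-detection controls above can be sketched with a simple rule-based check over logged events. This is an assumption-laden illustration: the `AuditEvent` shape, the thresholds, and the working-hours window are hypothetical, and production systems typically use richer signals than two fixed rules.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEvent:
    user: str
    system: str
    timestamp: datetime


def flag_anomalies(events, max_queries_per_user=100, work_hours=range(7, 20)):
    """Return {user: [reasons]} for users who break either illustrative rule."""
    reasons: dict[str, set[str]] = {}
    # Rule 1: too many logged queries for a single user.
    for user, count in Counter(e.user for e in events).items():
        if count > max_queries_per_user:
            reasons.setdefault(user, set()).add("excessive queries")
    # Rule 2: any access whose hour falls outside the working-hours window.
    for e in events:
        if e.timestamp.hour not in work_hours:
            reasons.setdefault(e.user, set()).add("access outside normal hours")
    return {user: sorted(r) for user, r in reasons.items()}


events = [
    AuditEvent("alice", "salesforce", datetime(2025, 10, 27, 10, 0)),
    AuditEvent("alice", "salesforce", datetime(2025, 10, 27, 10, 5)),
    AuditEvent("alice", "salesforce", datetime(2025, 10, 27, 10, 9)),
    AuditEvent("bob", "github", datetime(2025, 10, 27, 3, 0)),
]
print(flag_anomalies(events, max_queries_per_user=2))
```

The same event log serves both purposes: it is the audit trail, and it is the input to the alerting rules.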

The Confidence to Move Fast

When governance is automated and embedded, it provides security teams with the confidence to accelerate. Instead of asking "Is this safe?" for every AI tool, the question becomes, "Are our policies comprehensive?"

This shift enables organizations to deploy AI tools in minutes while maintaining a stronger security posture than manual review processes ever provided.

Measuring AI Adoption Success

Successful AI adoption requires disciplined measurement. Without clear metrics, you can't distinguish genuine progress from activity theater.

The Measurement Framework

Tier 1: Adoption Metrics (Leading Indicators)

Number of AI tools deployed enterprise-wide

Active users per tool (daily, weekly, monthly)

Percentage of employees using at least one AI tool

Use case coverage across departments

Deployment velocity (tools deployed per month)

These metrics indicate adoption momentum but don't directly measure value creation.
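A minimal sketch of how the Tier 1 numbers might be computed from a usage log follows. It assumes each usage event is a `(user, tool, date)` tuple, which is a hypothetical shape chosen for illustration; the function names are likewise illustrative.

```python
from collections import defaultdict
from datetime import date, timedelta


def active_users_per_tool(events, as_of: date, window_days: int) -> dict[str, int]:
    """Count distinct users per tool in the window_days ending on as_of."""
    cutoff = as_of - timedelta(days=window_days)
    users = defaultdict(set)
    for user, tool, day in events:
        if cutoff < day <= as_of:
            users[tool].add(user)
    return {tool: len(u) for tool, u in users.items()}


def adoption_rate(events, headcount: int) -> float:
    """Share of employees who used at least one AI tool."""
    return len({user for user, _, _ in events}) / headcount


events = [
    ("ana", "chat", date(2025, 10, 27)),
    ("ben", "chat", date(2025, 10, 15)),
    ("ana", "code", date(2025, 10, 1)),
]
print(active_users_per_tool(events, as_of=date(2025, 10, 27), window_days=7))
print(active_users_per_tool(events, as_of=date(2025, 10, 27), window_days=30))
print(adoption_rate(events, headcount=10))
```

Running the same function with 1, 7, and 30 day windows gives the daily, weekly, and monthly active-user views listed above.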

Tier 2: Productivity Metrics (Leading to Lagging Indicators)

Time saved per user per week (survey or workflow analysis)

Number of tasks automated (quantity and complexity)

Output quality improvements (error rates, revision cycles)

Employee satisfaction scores with AI tools

Feature velocity (engineering teams) or sales cycle time (sales teams)

These metrics connect AI adoption to operational improvements but still require translation to business outcomes.

Tier 3: Business Metrics (Lagging Indicators)

ROI calculation: (Cost savings + value created) / (Infrastructure + licensing costs)

Revenue impact: Increased sales, faster deal cycles, expanded market reach

Cost reduction: Headcount efficiency, operational cost savings

Competitive positioning: Win rates, market share changes

Talent metrics: Retention rates, time to hire, employee engagement

These metrics demonstrate business value to executives and board members but lag behind adoption changes.
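The ROI formula in Tier 3 translates directly into code. The sketch below implements the ratio exactly as written above, (cost savings + value created) / (infrastructure + licensing costs); the dollar amounts in the example are made up for illustration.

```python
def roi_ratio(cost_savings: float, value_created: float,
              infrastructure_cost: float, licensing_cost: float) -> float:
    """Benefit-to-cost ratio as defined in the Tier 3 list above."""
    total_cost = infrastructure_cost + licensing_cost
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (cost_savings + value_created) / total_cost


# Illustrative numbers only: $500k of benefit against $250k of cost.
print(roi_ratio(cost_savings=300_000, value_created=200_000,
                infrastructure_cost=150_000, licensing_cost=100_000))
```

Note that this definition is a benefit-to-cost ratio, so a value above 1.0 means benefits exceed costs. Some finance teams prefer net ROI, (benefit - cost) / cost, so it is worth agreeing on the definition before reporting the number.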

Establishing Baselines

The most common measurement mistake is deploying AI tools without capturing baseline metrics. You can't measure productivity improvement if you don't know starting productivity levels.

Pre-deployment baseline capture:

Current time spent on workflows targeted for AI augmentation

Current output volume and quality metrics

Current employee satisfaction with tools and processes

Current deployment timelines for new technology

With baselines established, you can measure actual impact rather than relying on anecdotal evidence.
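As a small worked example, comparing a captured baseline with a post-deployment measurement is a one-line calculation per workflow. The workflow names and hour figures below are invented for illustration, and `time_saved` is a hypothetical helper.

```python
def time_saved(baseline_hours: float, current_hours: float) -> tuple[float, float]:
    """Return (hours saved, percent reduction) relative to the baseline."""
    if baseline_hours <= 0:
        raise ValueError("baseline must be positive")
    saved = baseline_hours - current_hours
    return saved, 100 * saved / baseline_hours


# Hypothetical weekly hours per user: (before deployment, after deployment).
workflows = {
    "ticket triage": (10.0, 7.5),
    "release notes": (4.0, 3.0),
}
for name, (before, after) in workflows.items():
    hours, percent = time_saved(before, after)
    print(f"{name}: {hours:.1f} h/week saved ({percent:.0f}% reduction)")
```

Without the `before` column there is nothing to subtract from, which is why the baseline has to be captured ahead of deployment.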

The 30-60-90 Day Measurement Cadence

Days 1-30: Adoption Metrics

Track deployment progress and user activation

Measure initial usage patterns

Identify early adoption barriers

Gather qualitative feedback

Days 31-60: Productivity Metrics

Compare productivity against baselines

Calculate time savings per user

Assess output quality changes

Identify successful use cases to replicate

Days 61-90: Business Metrics

Calculate initial ROI

Measure business outcome improvements

Present results to executive stakeholders

Plan expansion based on results

This cadence provides early signals of success while building toward comprehensive business impact measurement.

Getting Started: Your 30-60-90 Day Roadmap

Accelerating AI adoption requires systematic execution. Here's a phased roadmap that organizations can follow to move from planning to enterprise-wide deployment:

Days 1-30: Foundation Setup

Week 1: Assessment and Planning

Audit current AI initiatives and shadow AI usage

Identify high-value use cases for initial deployment

Define governance policies and access requirements

Establish baseline productivity metrics

Week 2: Platform Deployment

Deploy MCP Gateway infrastructure (cloud, on-prem, or hybrid)

Integrate with enterprise SSO and identity systems

Configure role-based access control policies

Set up audit logging and monitoring

Week 3: Initial Integrations

Deploy 3-5 high-priority MCP servers

Connect to most-requested enterprise systems (Salesforce, GitHub, Slack, databases)

Validate connectivity and access controls

Test with pilot user group

Week 4: Governance Validation

Confirm audit trails are capturing required data

Verify compliance with security policies

Test anomaly detection and alerting

Present governance framework to security and compliance teams

Deliverables: AI foundation deployed, initial integrations live, governance validated

Days 31-60: Pilot Expansion

Week 5: Early Adopter Rollout

Identify 50-100 early adopter users across departments

Provide hands-on training and enablement

Grant access to AI tools connected through MCP servers

Gather initial feedback and usage data

Week 6: Measure and Iterate

Analyze productivity improvements against baselines

Calculate time saved per user per week

Identify successful workflows to replicate

Address user friction points and feature requests

Week 7: Expand Integrations

Deploy 5-10 additional MCP servers based on user demand

Connect new AI tools requested by business units

Add integrations to additional enterprise systems

Optimize based on usage patterns

Week 8: Build Momentum

Share early success stories and productivity data

Recruit additional early adopters

Conduct lunch-and-learn sessions

Prepare for enterprise-wide rollout

Deliverables: 50-100 active users, productivity gains measured, expansion plan validated

Days 61-90: Scale to Production

Week 9: Enterprise Rollout Planning

Define rollout schedule by department

Prepare training materials and documentation

Plan communication campaign

Allocate support resources

Week 10: Phased Enterprise Deployment

Roll out to first department (e.g., Sales, Engineering, Marketing)

Provide department-specific training

Monitor adoption and address issues

Gather feedback and iterate

Week 11: Continued Expansion

Roll out to additional departments

Add more AI tools and integrations based on demand

Scale support and enablement resources

Optimize based on enterprise usage patterns

Week 12: Measurement and Optimization

Calculate enterprise-wide productivity improvements

Measure ROI against infrastructure costs

Present results to executive stakeholders

Plan next-phase expansion (more tools, use cases, integrations)

Deliverables: Enterprise-wide deployment, ROI validated, sustained adoption trajectory

Frequently Asked Questions

What is enterprise AI adoption?

Enterprise AI adoption is the process of integrating artificial intelligence tools and agents into business workflows at organizational scale. According to Stanford HAI (2025), nearly 80% of organizations now report using AI. Successful adoption requires the right technical foundation, governance framework, and organizational change management, not just access to AI tools.

Why are most AI adoption efforts failing?

According to McKinsey (2025), less than one-third of organizations follow AI adoption best practices. Common failure points include integration complexity (3-6 months per tool), governance bottlenecks, and lack of standardization. Organizations without a protocol-based foundation struggle to move from pilot to production, getting stuck in what McKinsey calls "more pilots than Lufthansa" syndrome: successful experiments that never scale.

What is "pilot purgatory" in AI adoption?

Pilot purgatory refers to organizations stuck running successful AI experiments that never scale to production. According to McKinsey (2025), this happens when each AI tool requires 3-6 months of custom integration work, creating a bottleneck where 15 tools would take 75 months of sequential development. Organizations end up with dozens of validated pilots but no enterprise-wide deployment.

How long does AI deployment typically take?

Traditional AI tool deployment takes 3-6 months per integration, including security reviews, custom development, and testing. Protocol-based approaches using Model Context Protocol (MCP) can reduce this to minutes through standardized integration and automated governance. The deployment time difference (months versus minutes) determines whether organizations can scale AI or remain stuck in pilot purgatory.

What is the difference between API-based and protocol-based AI integration?

API-based integration requires custom development for each AI tool-to-system connection, creating N×M complexity (10 tools × 10 systems = 100 integrations). Protocol-based integration uses open standards like Model Context Protocol (MCP) where one standardized connector works with all AI tools. Think of it like HTTP for the web: you don't need custom code to visit each website because browsers and servers speak the same protocol.

Why can't enterprises just use AI tools directly without integration?

AI tools need access to your enterprise data to be useful: customer records in Salesforce, code repositories in GitHub, team conversations in Slack, business metrics in databases. Without secure, governed integration, employees either can't use AI effectively (no context) or resort to risky workarounds like copying sensitive data into public AI tools. Proper integration provides AI access while maintaining security, compliance, and audit controls.

What are the biggest AI adoption barriers in enterprises?

Research from Gartner and McKinsey identifies top barriers: integration complexity (3-6 months per tool), security review delays (6-12 months), lack of organizational readiness, unclear ROI measurement, and vendor lock-in. Organizations that address these systematically through protocol-based foundations, automated governance, and structured change management accelerate adoption significantly. Technology alone isn't sufficient; organizational transformation matters.

Take the Next Step

The enterprises winning with AI aren't experimenting with more pilots; they're building the right foundation for sustained, scaled adoption.

Natoma's MCP Platform provides the protocol-based infrastructure that accelerates deployment from months to minutes while maintaining enterprise governance and control. With 100+ pre-built MCP servers, one-click deployment, and automated policy enforcement, organizations move from pilot purgatory to enterprise-wide production in weeks, not quarters.

Ready to accelerate your AI adoption? Learn how Natoma transforms enterprise AI deployment at natoma.ai.