Natoma Blog

How to: Enabling MCP in Windsurf

Jul 15, 2025

Pratyus Patnaik

Windsurf is an AI-native coding environment that integrates directly with Model Context Protocol (MCP) servers. Connecting Windsurf to MCP lets its AI agents pull real-time, relevant context from tools like GitHub, Jira, and Asana, so they can reason over internal tools, documents, tickets, alerts, and more, with permissioning built in. It also gives agents dynamic access to codebases, tasks, and system data, so the assistant works from accurate, current context.

This step-by-step guide walks you through three options for integrating Windsurf with an MCP server, using GitHub, Asana, and Datadog as examples.

MCP Server Types Supported

Windsurf supports the following types of MCP servers:

Local Stdio: Runs on your machine and streams responses via standard input/output (stdio).

Streamable HTTP: The server runs as an independent process that can handle multiple client connections.

Step 1: Enable MCP in Windsurf

Open the Windsurf editor.

Log into your Windsurf account

Press

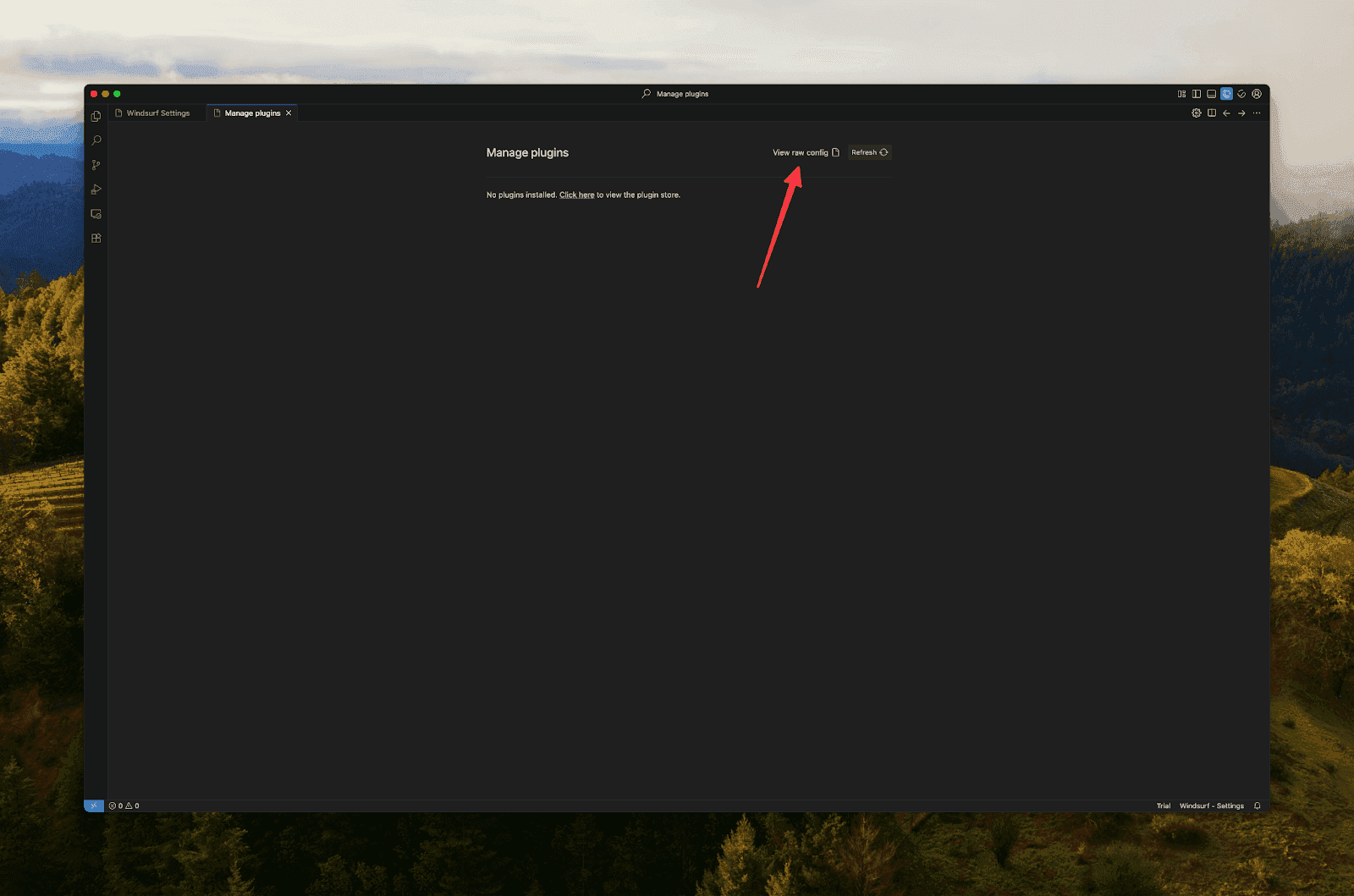

Cmd/Ctrl + ,to open "Windsurf Settings".Scroll to “Plugins (MCP servers)” under Casacade

Click Manage Plugins

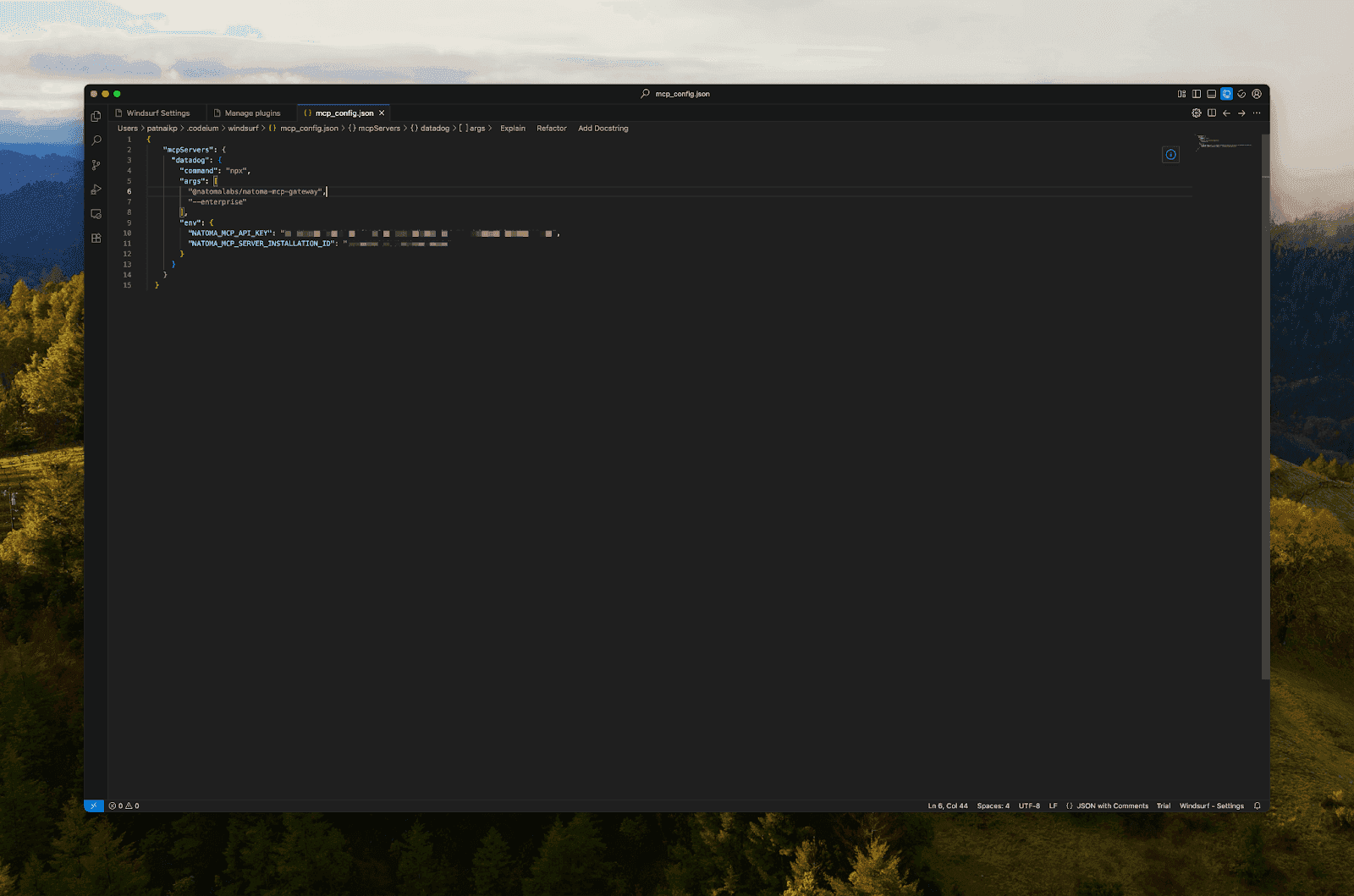

Click on “View raw config”

This will create or open the config file at one of the following paths:

Add or modify your configuration as shown in the examples below.
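
If you would rather script the change than edit the file by hand, the following is a minimal sketch of adding one server entry without disturbing the others. It assumes the raw config is a JSON object with a top-level `mcpServers` map (the layout commonly used by MCP clients); `upsert_server` is a hypothetical helper, and the config path is whatever "View raw config" opened on your machine.

```python
import json
from pathlib import Path


def upsert_server(config_path: Path, name: str, entry: dict) -> dict:
    """Add or replace one server entry, leaving every other entry intact."""
    # Start from the existing file when it has content, else from an empty config.
    text = config_path.read_text().strip() if config_path.exists() else ""
    config = json.loads(text) if text else {}

    # "mcpServers" is the assumed top-level key; create it if it is missing.
    config.setdefault("mcpServers", {})[name] = entry

    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

Calling `upsert_server(path, "github", {...})` twice with different names leaves both entries in the file, so it is safe to run once per server you add.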

Step 2: Choose Your MCP Server Setup

Windsurf requires an MCP-compliant server that can respond to context queries. You have three setup options:

Option A: Run the MCP Server Locally

Ideal for development, testing, and debugging.

Instructions:

Install Docker.

Ensure Docker is running.

If you encounter issues pulling the image, an expired token may be cached; run `docker logout ghcr.io` to clear it, then try again.

Create a GitHub Personal Access Token.

Choose permissions based on what you’re comfortable sharing with the LLM.

Learn more in the GitHub documentation.

Config Example:

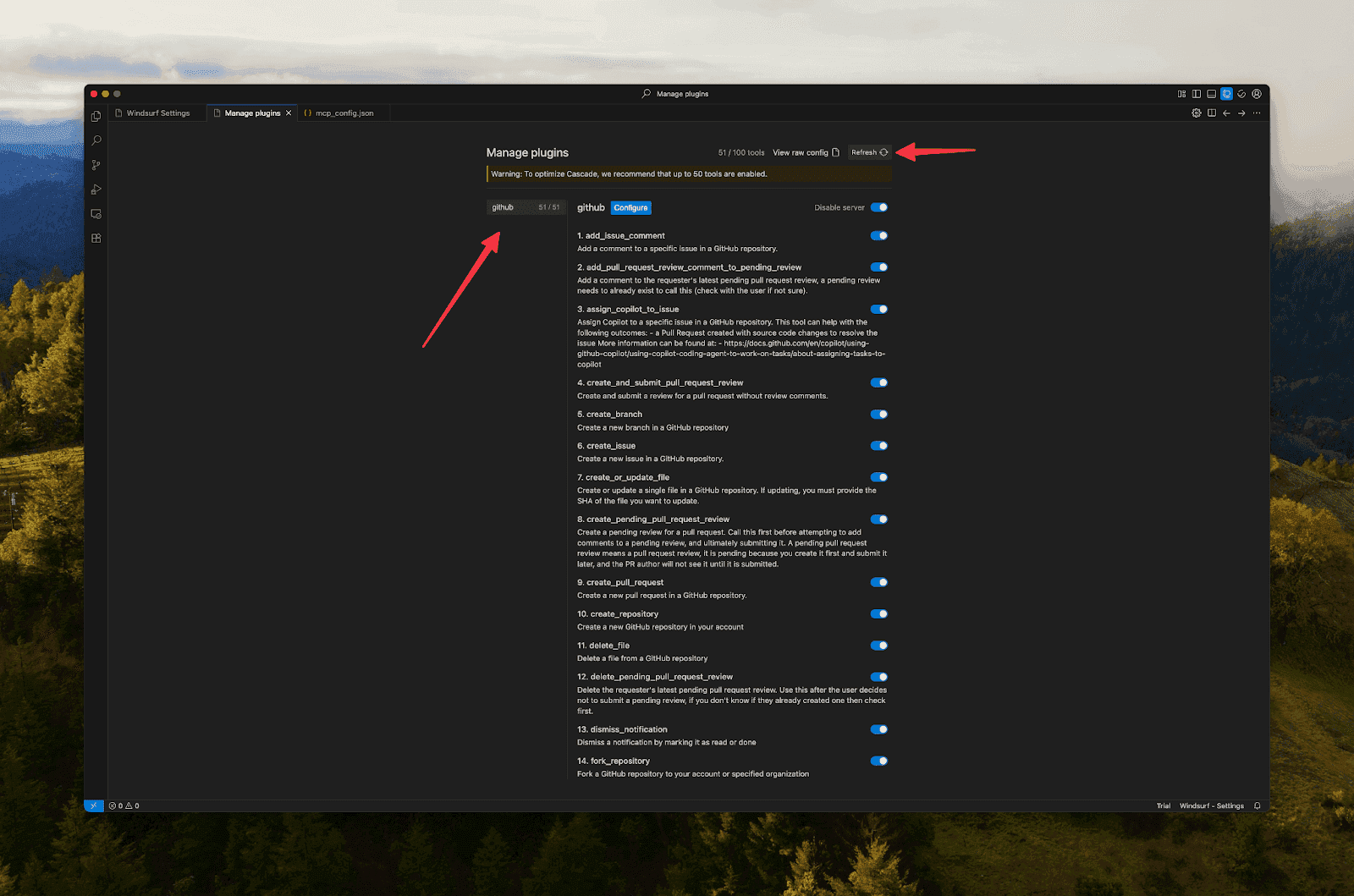

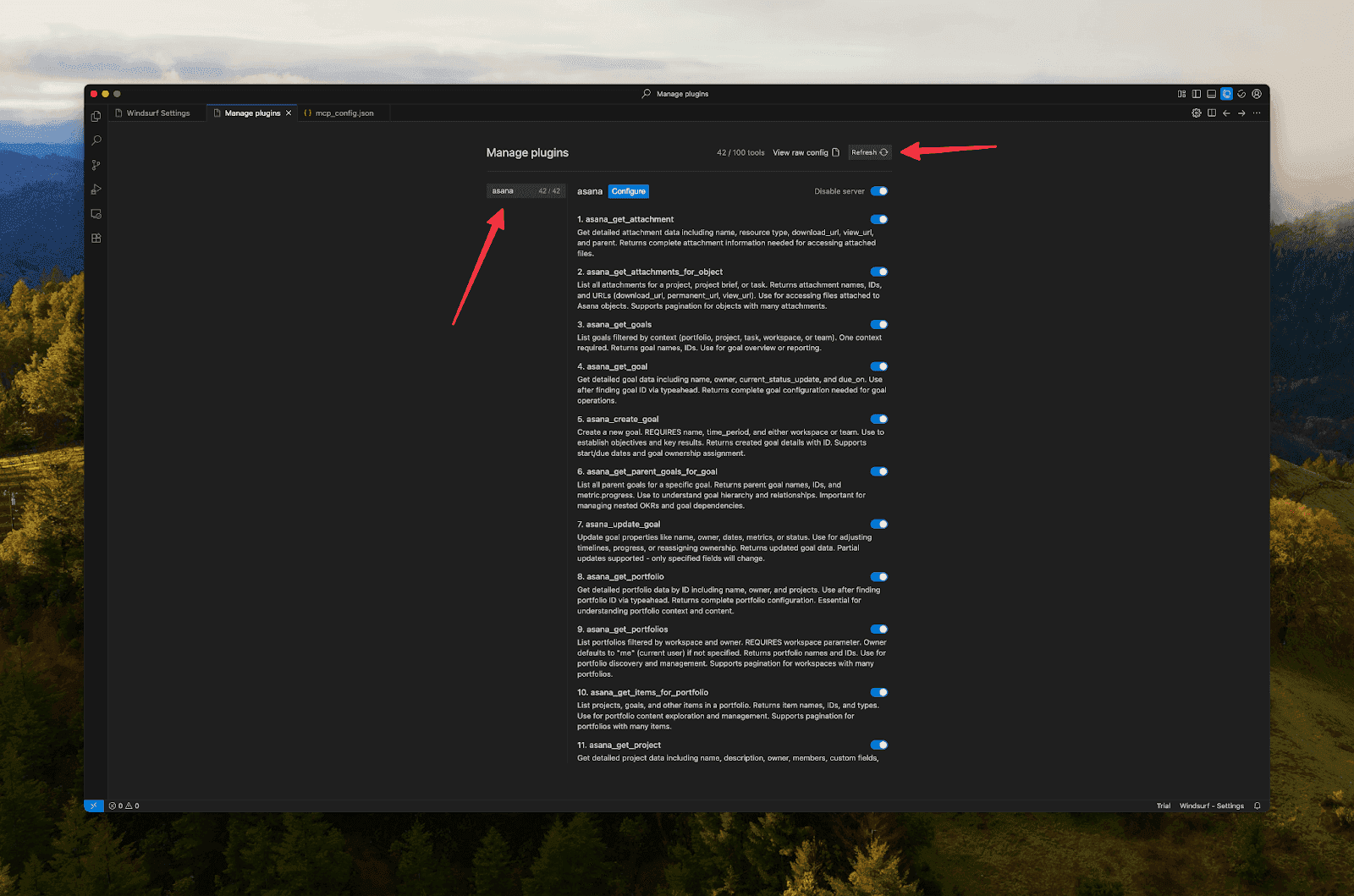

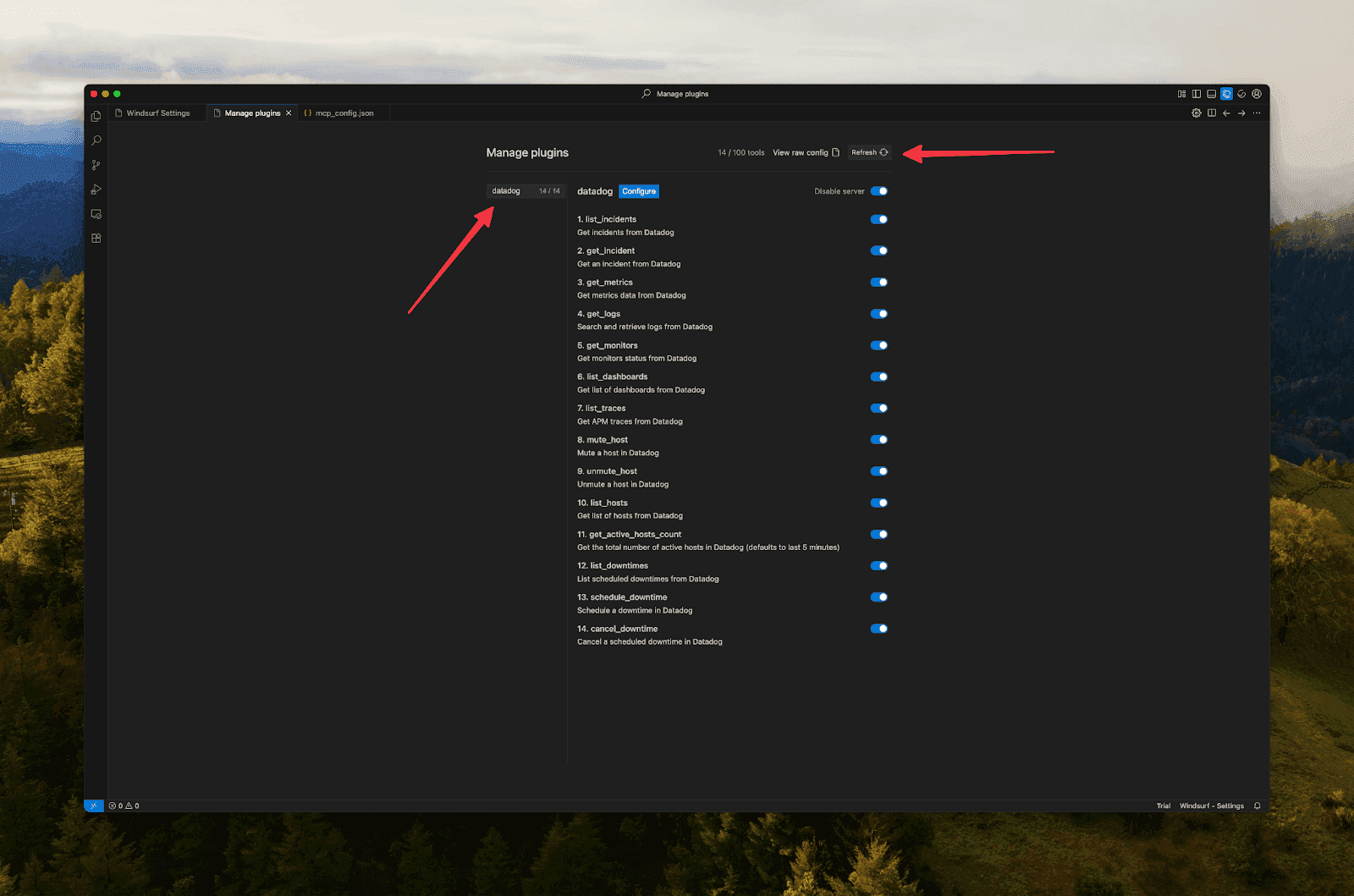
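
For readers who cannot see the screenshots, here is a rough sketch of what a Docker-based GitHub entry might look like, written as a short Python script that prints the JSON to paste into the raw config. The image name `ghcr.io/github/github-mcp-server` and the `GITHUB_PERSONAL_ACCESS_TOKEN` variable follow the GitHub MCP server's published setup; the `github` entry name and the token placeholder are assumptions for illustration.

```python
import json

# Placeholder only: substitute the Personal Access Token you created above.
GITHUB_TOKEN = "<YOUR_GITHUB_PAT>"

github_config = {
    "mcpServers": {
        "github": {  # entry name is arbitrary
            "command": "docker",
            "args": [
                "run", "-i", "--rm",
                # Forward the token from the env block below into the container.
                "-e", "GITHUB_PERSONAL_ACCESS_TOKEN",
                "ghcr.io/github/github-mcp-server",
            ],
            "env": {"GITHUB_PERSONAL_ACCESS_TOKEN": GITHUB_TOKEN},
        }
    }
}

# Print the JSON to paste into the raw config.
print(json.dumps(github_config, indent=2))
```

The `-i` flag keeps stdin open, which a Local Stdio server needs because Windsurf talks to it over standard input/output.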

Save & click refresh

Option B: Use a Hosted MCP Server (e.g., Asana, Atlassian)

The easiest option—no setup required. Note that you won’t have central control or visibility into interactions between Windsurf and the integrated service.

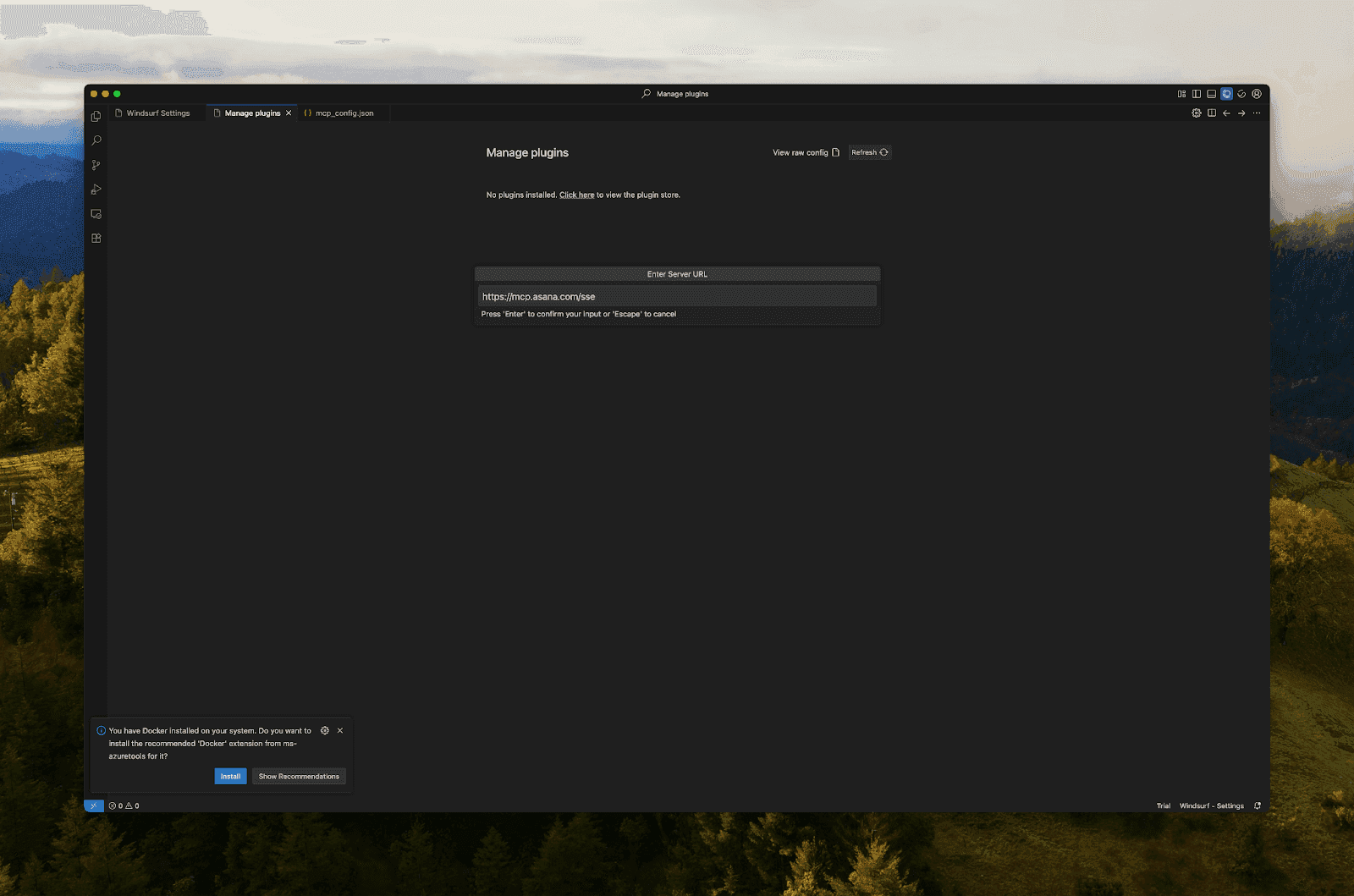

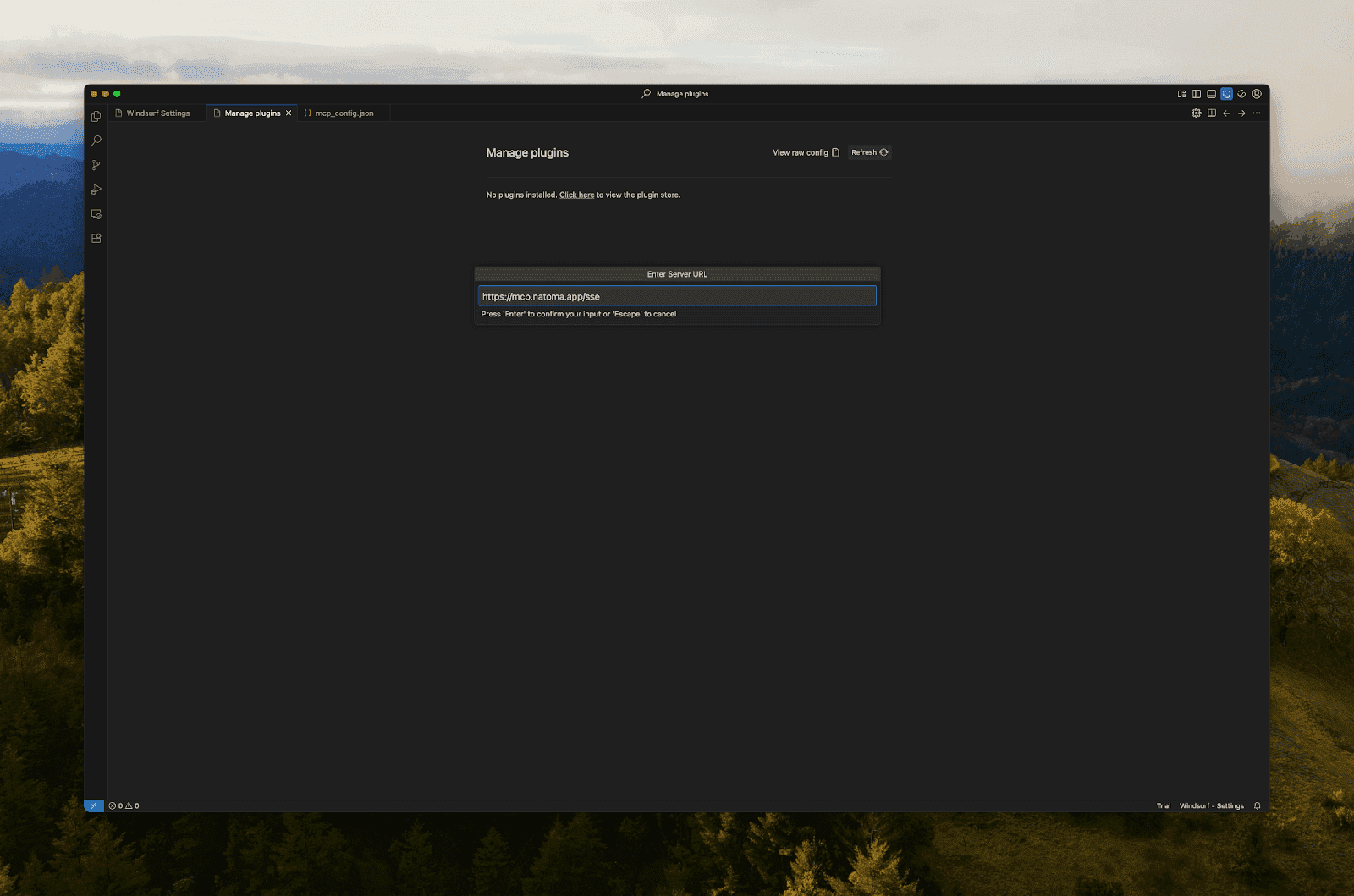

Method 1: Via UI

Press Cmd/Ctrl + Shift + P to open the Command Palette. Search for and select "MCP: Add Server", then select "HTTP (server-sent events)".

Method 2: Via Config

Install the `mcp-remote` package.

Config Example:
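
As a hedged illustration, the script below prints one plausible entry that launches `mcp-remote` through `npx` and points it at a hosted server. The Asana URL is an assumption, so confirm the current endpoint in the provider's documentation; the `asana` entry name is arbitrary.

```python
import json

# Assumed endpoint: check the provider's documentation for the current URL.
ASANA_MCP_URL = "https://mcp.asana.com/sse"

asana_config = {
    "mcpServers": {
        "asana": {
            "command": "npx",
            # -y lets npx fetch mcp-remote without an interactive prompt.
            "args": ["-y", "mcp-remote", ASANA_MCP_URL],
        }
    }
}

# Print the JSON to paste into the raw config.
print(json.dumps(asana_config, indent=2))
```

Here `mcp-remote` acts as a local stdio bridge to the remote server, so Windsurf treats the hosted service like any other command-based entry.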

Save & click refresh

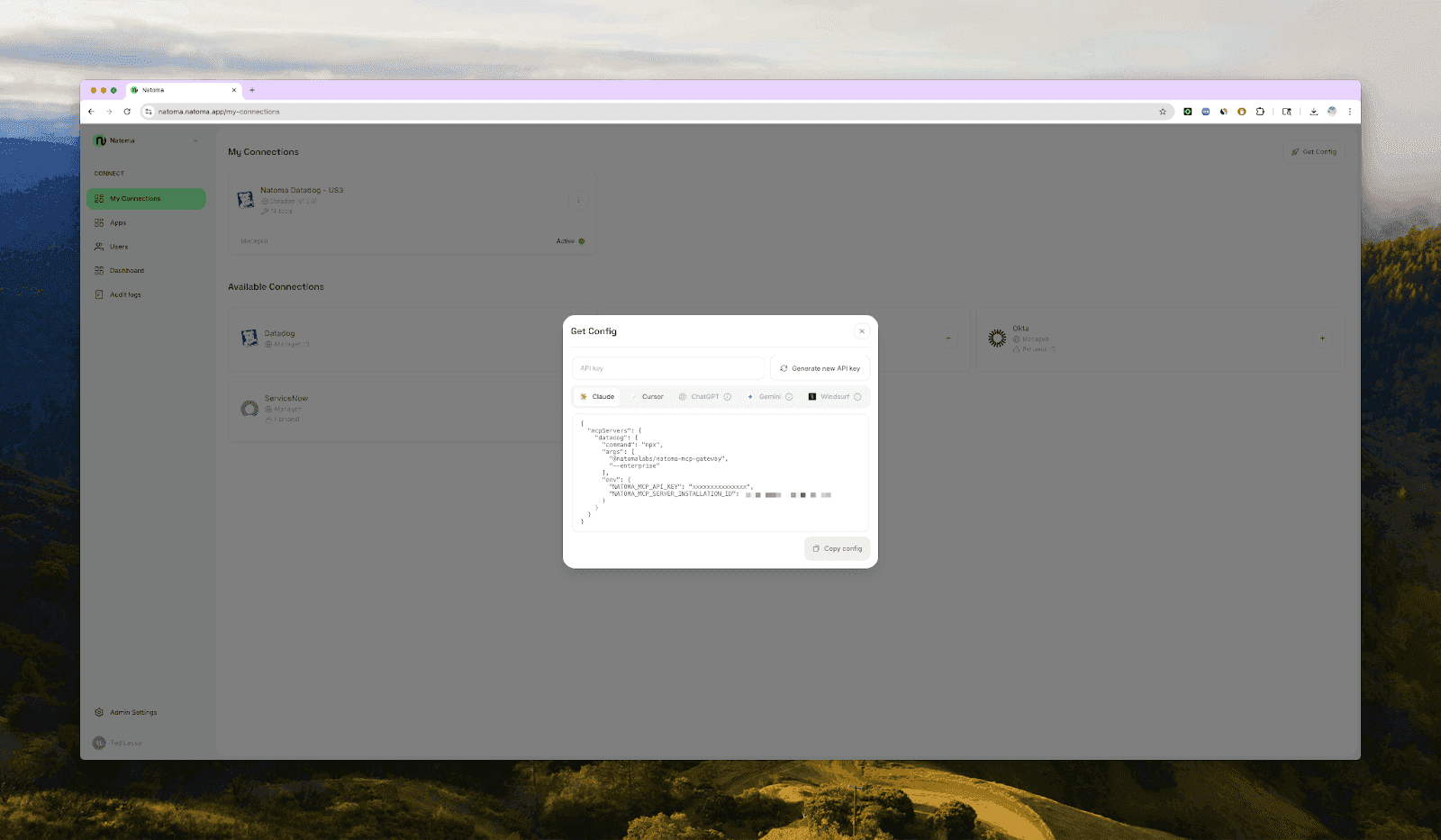

Option C: Deploy Your Own Remote MCP Server

Recommended for teams and production use. This setup offers:

Full visibility and governance

Access control across employees and agents

You can deploy your MCP server using a platform like Natoma. Connect apps from the registry, retrieve the configuration or Streamable HTTP endpoint, and paste it into the Windsurf IDE.

Save & click refresh
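
If your platform hands you only an endpoint URL rather than a full configuration, an entry along these lines may work. This is a sketch under two assumptions: that Windsurf reads a `serverUrl` key for HTTP-based servers (verify against the current Windsurf documentation), and that the URL shown is a stand-in for the one your deployment provides. `remote_entry` and the `team-tools` name are hypothetical.

```python
import json
from urllib.parse import urlparse


def remote_entry(url: str) -> dict:
    """Build a URL-based server entry after a basic sanity check on the URL."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an HTTP(S) endpoint: {url!r}")
    # "serverUrl" is the assumed key name for remote servers.
    return {"serverUrl": url}


# Hypothetical endpoint: replace with the one your deployment provides.
MCP_ENDPOINT = "https://mcp.example.com/mcp"

remote_config = {"mcpServers": {"team-tools": remote_entry(MCP_ENDPOINT)}}

# Print the JSON to paste into the raw config.
print(json.dumps(remote_config, indent=2))
```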

Troubleshooting & Testing

To verify MCP integration:

Ask Windsurf a question that requires context from recent activity.

Windsurf will send a background request to the MCP server, which returns structured context.

Monitor Windsurf MCP logs:

Press Cmd/Ctrl + Shift + P to open the Command Palette. Search for and select "Developer: Show Logs...".

Click Windsurf.

Ensure your MCP server responds with valid JSON in the correct schema.

Use the MCP Inspector for debugging.
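
For the "valid JSON in the correct schema" check above, a quick first pass is to confirm that a captured response is a well-formed JSON-RPC 2.0 message, which is the envelope MCP uses. The sketch below checks only that envelope, not the MCP-specific payload inside `result`; `check_jsonrpc_response` is a hypothetical helper, and the sample strings stand in for whatever you copy from the logs.

```python
import json


def check_jsonrpc_response(raw: str) -> list[str]:
    """Return the problems found; an empty list means the envelope looks valid."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(msg, dict):
        return ["top-level value must be a JSON object"]

    problems = []
    if msg.get("jsonrpc") != "2.0":
        problems.append('missing or wrong "jsonrpc" (expected "2.0")')
    if "id" not in msg:
        problems.append('missing "id"')
    # A response carries either a result or an error, never both or neither.
    if ("result" in msg) == ("error" in msg):
        problems.append('expected exactly one of "result" or "error"')
    return problems


# Sample inputs standing in for responses copied from the logs.
print(check_jsonrpc_response('{"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}'))
print(check_jsonrpc_response('{"id": 1}'))
```

An empty list means the envelope passed. Anything else points to where the server's reply deviates, which you can then dig into with the MCP Inspector.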

Wrap-Up

Integrating Windsurf with an MCP server—whether self-hosted, remote, or SaaS—takes just a few minutes. This unlocks powerful contextual capabilities, allowing you to embed the full history, structure, and semantics of your dev stack into every AI interaction.